Is this the future of 3D imaging?

Recently, there's been a lot of buzz around a new technology called Neural Radiance Fields, or NeRFs. As a way of 3D scanning objects, they're similar to photogrammetry, but they use machine learning to reconstruct how an object looks from different viewpoints. But what exactly are NeRFs, and what makes them so special?

In simple terms, a NeRF is built from photos of an object taken from many different viewpoints. A machine learning model learns from those photos to fill in the views in between, producing a highly accurate representation of the object. This is similar to photogrammetry, which uses a series of photographs of an object, and the points they have in common, to create a 3D model.
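For readers curious about the "fill in the views in between" part: in the original NeRF approach, a trained network is asked, for points along each camera ray, how dense the scene is there and what colour it is (the colour can also depend on the viewing direction, which is what lets reflections change as you move around). Those samples are then blended into a single pixel. The sketch below shows only that blending step, using the standard volume-rendering sum from the NeRF research; the function name `composite_ray` and the sample values are illustrative stand-ins for a trained network, not how Luma AI or any particular app implements it.

```python
import math

def composite_ray(densities, colors, deltas):
    """Blend samples along one camera ray into a single RGB pixel.

    Illustrative sketch of NeRF-style volume rendering (hypothetical helper).
    densities: volume density (sigma) at each sample, >= 0
    colors:    (r, g, b) at each sample, each channel in [0, 1]
    deltas:    distance between consecutive samples along the ray
    """
    pixel = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light that reaches this sample unblocked
    for sigma, rgb, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this ray segment
        weight = transmittance * alpha          # this sample's share of the pixel
        for k in range(3):
            pixel[k] += weight * rgb[k]
        transmittance *= 1.0 - alpha            # light left for samples further back
    return tuple(pixel)

# Example: empty space, then a dense orange surface, then something grey behind it.
# These values are made up for illustration, not output from a real model.
pixel = composite_ray(
    densities=[0.0, 0.0, 50.0, 50.0],
    colors=[(0, 0, 0), (0, 0, 0), (1.0, 0.5, 0.0), (0.2, 0.2, 0.2)],
    deltas=[0.1, 0.1, 0.1, 0.1],
)
print(pixel)
```

The resulting pixel is almost entirely orange: the empty samples contribute nothing, and the first dense sample absorbs nearly all of the light, so the grey point hidden behind it barely shows.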

So, what sets NeRFs apart from photogrammetry? The main difference is that they can reproduce reflective surfaces with remarkable accuracy, something photogrammetry struggles with.

The photogrammetry result is on the left. The NeRF is on the right.

With these advancements in mind, it's natural to ask: will NeRFs replace photogrammetry? To find out, let's compare the performance of both methods. For this comparison, I'll use the photogrammetry app Polycam and the NeRF app Luma AI.

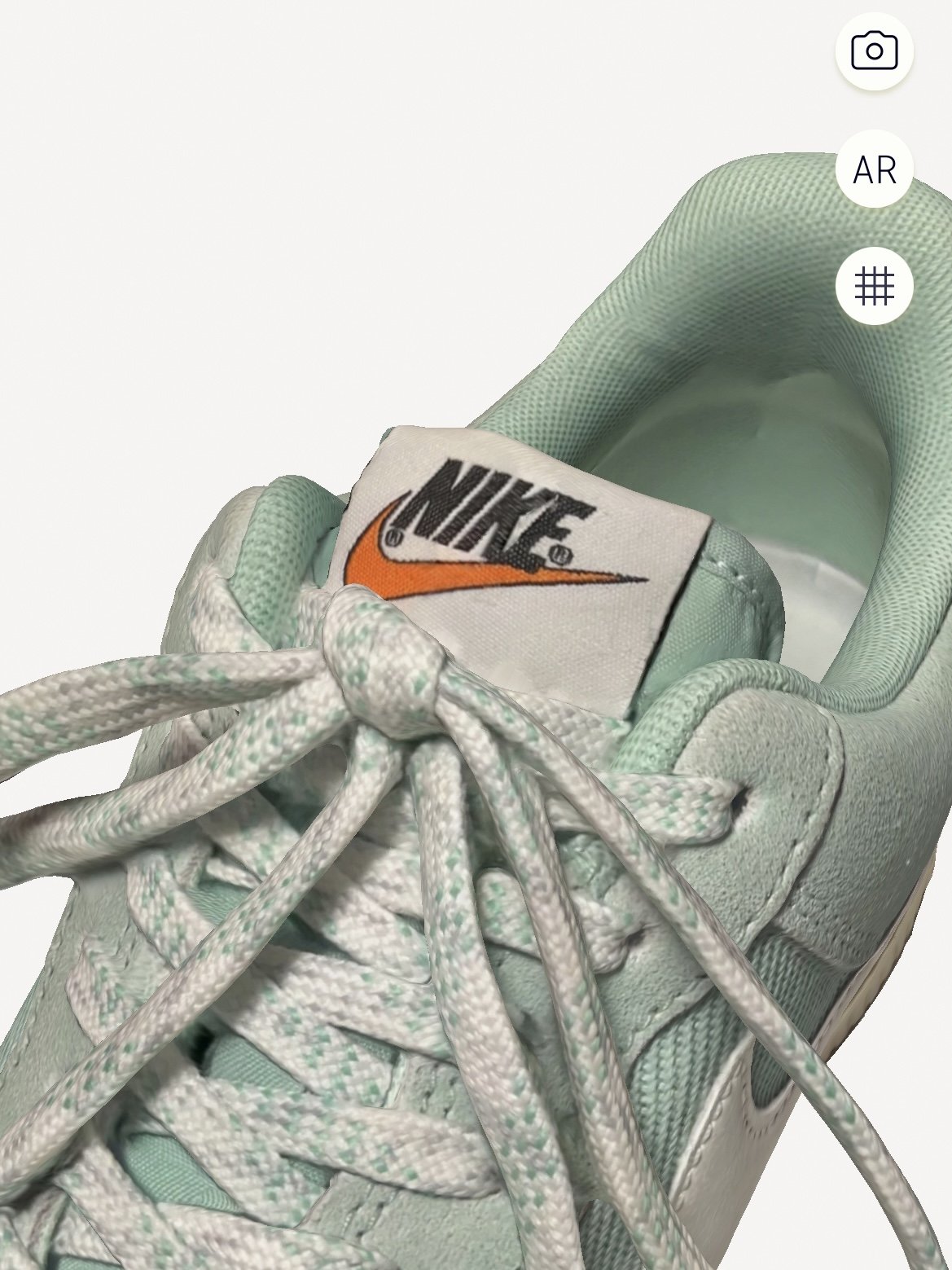

To test both methods, I'll use a shoe as the subject and scan it with both Polycam and Luma AI to see how each performs.

Polycam photogrammetry result:

Luma AI NeRF result:

The results are impressive, but that isn't surprising for a well-lit object with soft, matte surfaces. One important difference, though, is the level of detail and colour reproduction. It's worth saying that results may vary from capture to capture, and with both methods it's important to work very slowly to get the best results. That being said, the detail and colour reproduction are noticeably more accurate in the photogrammetry result than in the NeRF, especially in the orange Nike swoosh.

So, what does this comparison tell us about the future of photogrammetry and NeRFs? NeRFs represent a significant leap in technology, with the potential to change the way we view and interact with objects in the digital world. However, it's too early to predict the end of photogrammetry. It has been a reliable method of creating 3D models for decades, and, as the shoe test shows, it can still beat a NeRF on detail and colour accuracy for matte subjects, so it's likely to stick around for the time being.

What's most exciting about NeRFs is their potential to make an impact in ways I can't even imagine yet. The technology is still in its infancy, but it has already opened up new possibilities in 3D scanning and modeling, and it will be fascinating to see how it changes the way we create and experience 3D, whether in VFX, game design, 3D art or even the metaverse.

In conclusion, while NeRFs represent a significant step forward in 3D scanning and modeling, it's too early to say whether they will replace photogrammetry. Both methods have their strengths and weaknesses: NeRFs handle reflective surfaces far better, while photogrammetry delivered more accurate detail and colour on the matte shoe. It's up to each user to decide which works best for their subject. But one thing is for sure: the future of 3D is looking bright, and I'm eager to see how it develops in the coming years.